Brendan OConnell

Software Engineering / DevOps / Data Science

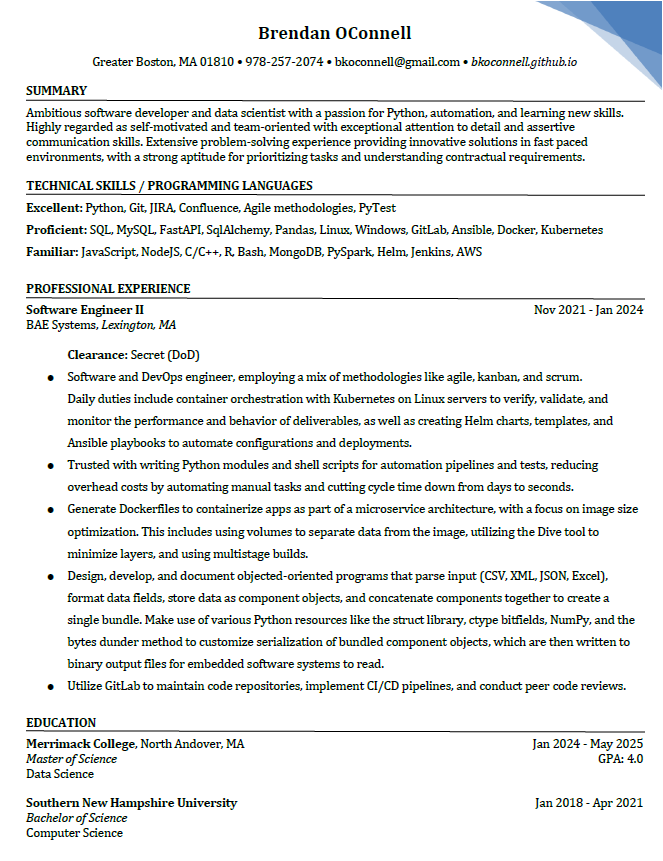

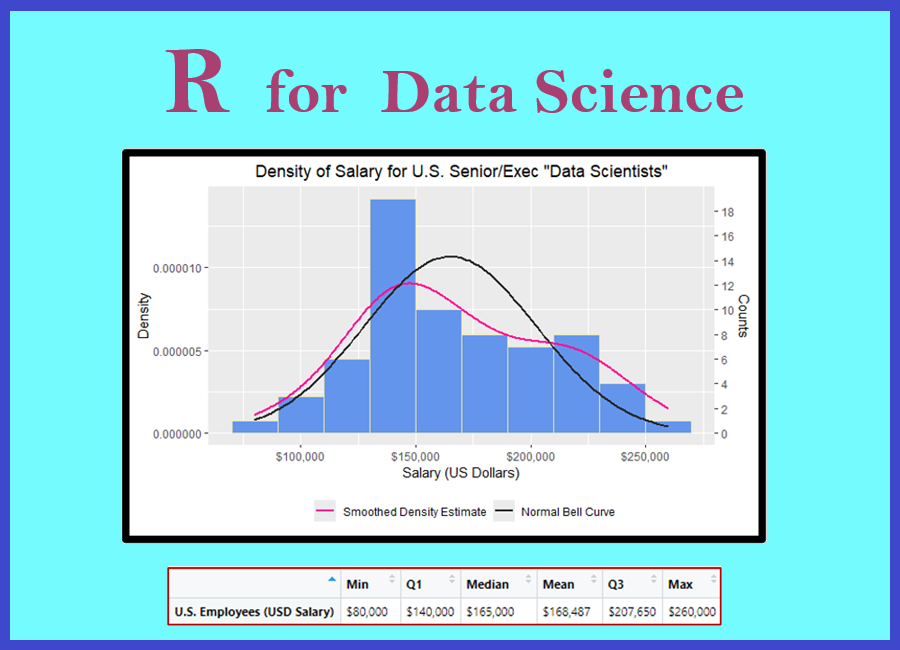

This R program is my midterm project for an R Data Science graduate class.

In addition to the R program, there is a PowerPoint presentation that highlights the project requirements, the data preparation, the analysis with data plots & statistics, and the final "recommendation" to present to the fictional CEO.

For the project's source code and the PowerPoint or PDF presentation slides, please visit my GitHub repository:

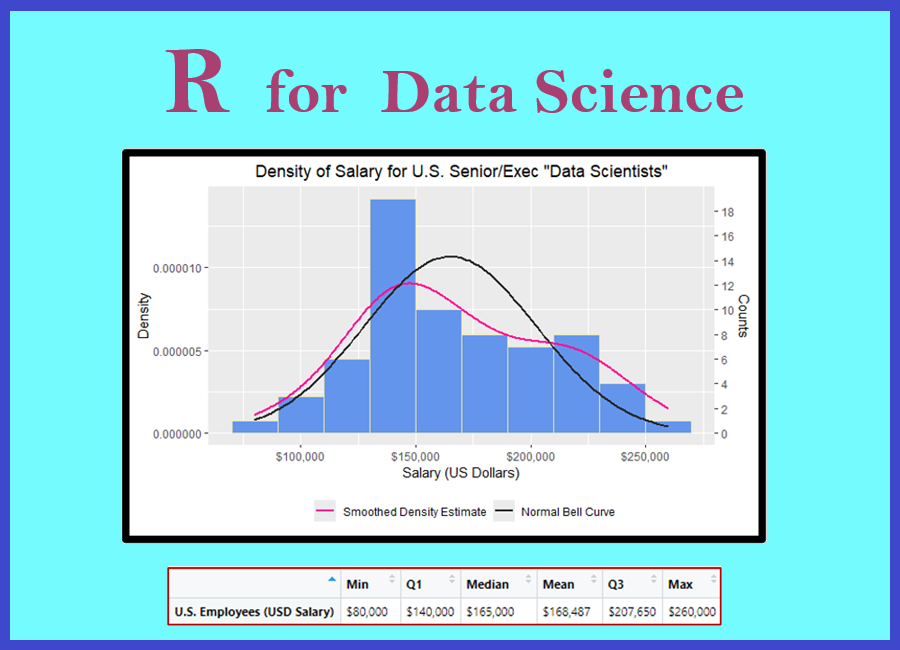

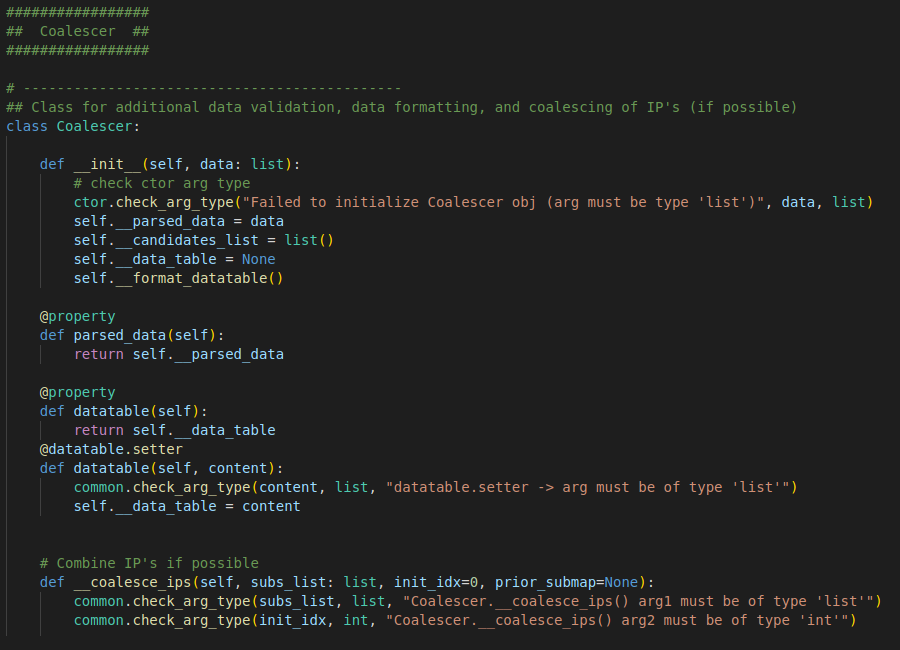

This application is a solution to a practice exercise involving subnets. It is written in Python using object-oriented programming (OOP), with several modules that each handle a specific task. The provided input data file contained several thousand lines of subnet data.

The objective was to read and parse the input file, combine the subnets on each line into the fewest subnets possible, format the data accordingly, and write the formatted data to a CSV file.

Instead of a requirements document with formatting specs, I was given an example output file. The instructions were to produce a solution output file identical to the example, so some trial and error was involved in determining the appropriate formatting. The input data includes invalid subnets, so data validation was critical. Also, subnets can only be coalesced (combined) if they are contiguous and have the same subnet mask.
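To make the same-mask, contiguous rule concrete, here is a minimal sketch built on Python's standard ipaddress module. It is not the coalescence.py implementation; the function name coalesce and the assumption that subnets arrive as CIDR strings are illustrative only. The sketch treats two subnets as contiguous only when they are the two halves of one parent block, because a CIDR block must start on a boundary of its own size.

```python
import ipaddress
from typing import Iterable, List


def coalesce(subnets: Iterable[str]) -> List[ipaddress.IPv4Network]:
    """Hypothetical helper: merge same-mask sibling subnets until none remain.

    IPv4Network() raises ValueError for malformed input or host bits set,
    so invalid subnets are rejected before any merging happens.
    """
    pool = {ipaddress.IPv4Network(s) for s in subnets}
    changed = True
    while changed:
        changed = False
        for net in sorted(pool):  # iterate over a snapshot; pool is mutated below
            if net not in pool or net.prefixlen == 0:
                continue  # already merged this pass, or nothing larger to merge into
            parent = net.supernet()  # the block one bit shorter
            low, high = parent.subnets()  # the two halves of that parent block
            sibling = high if net == low else low
            if sibling in pool:  # same mask and adjacent on the right boundary
                pool -= {net, sibling}
                pool.add(parent)
                changed = True  # the new parent may itself merge on the next pass
    return sorted(pool)
```

For example, coalesce(["10.0.0.0/25", "10.0.0.128/25", "10.0.1.0/24"]) collapses to the single network 10.0.0.0/23, while 10.0.1.0/24 and 10.0.2.0/24 stay separate because they are adjacent but do not share a parent /23.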

To view the intricacies of the formatting, validation, and coalescing requirements, please refer to my comments in the coalesce_ips and format_datatable methods of the coalescence.py module.

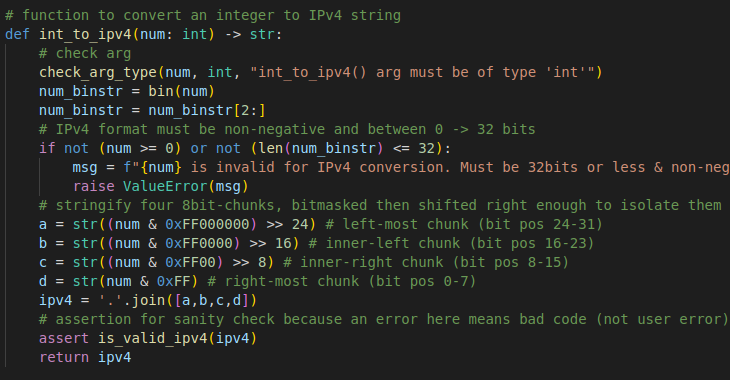

My favorite highlights from this assignment are the common functions in the common.py module. These functions range from binary math (setting a bit, getting the bit length, finding the lowest set bit, etc.) to intricate conversions (IPv4 mask to CIDR notation, int to IPv4 and vice versa), data validation (valid IPv4 format, valid mask value, etc.), and customized exception handling.
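As an illustration of that kind of bit-level helper, the following is a hedged sketch, not the contents of common.py. The names InvalidIPv4Error, lowest_set_bit, ipv4_to_int, int_to_ipv4, is_valid_mask, and mask_to_cidr are hypothetical. The key idea is that a valid mask, once inverted, is a solid run of low 1 bits, which a single AND can test.

```python
class InvalidIPv4Error(ValueError):
    """Hypothetical custom exception for malformed addresses or masks."""


def lowest_set_bit(n: int) -> int:
    """Value of the lowest set bit of n (0 when n == 0), via two's complement."""
    return n & -n


def ipv4_to_int(addr: str) -> int:
    """Convert dotted-quad text such as '192.168.1.1' to a 32-bit integer."""
    try:
        octets = [int(part) for part in addr.split(".")]
    except ValueError:
        raise InvalidIPv4Error(f"invalid IPv4 address: {addr!r}") from None
    if len(octets) != 4 or any(not 0 <= o <= 255 for o in octets):
        raise InvalidIPv4Error(f"invalid IPv4 address: {addr!r}")
    value = 0
    for octet in octets:
        value = (value << 8) | octet  # shift in one byte at a time
    return value


def int_to_ipv4(value: int) -> str:
    """Convert a 32-bit integer back to dotted-quad text."""
    if not 0 <= value <= 0xFFFFFFFF:
        raise InvalidIPv4Error(f"out of IPv4 range: {value}")
    return ".".join(str((value >> shift) & 0xFF) for shift in (24, 16, 8, 0))


def is_valid_mask(mask: str) -> bool:
    """A valid mask is a run of 1 bits followed only by 0 bits."""
    try:
        host_bits = ~ipv4_to_int(mask) & 0xFFFFFFFF
    except InvalidIPv4Error:
        return False
    # host_bits must look like 0b0...01...1, which is true iff x & (x + 1) == 0
    return (host_bits & (host_bits + 1)) == 0


def mask_to_cidr(mask: str) -> int:
    """Convert a dotted mask like '255.255.255.0' to a prefix length like 24."""
    if not is_valid_mask(mask):
        raise InvalidIPv4Error(f"invalid subnet mask: {mask!r}")
    host_bits = ~ipv4_to_int(mask) & 0xFFFFFFFF
    return 32 - host_bits.bit_length()  # prefix = 32 minus the host-bit count
```

The integer round trip also makes address arithmetic trivial: int_to_ipv4(ipv4_to_int("10.0.0.255") + 1) yields "10.0.1.0".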

Other highlights include custom generator functions, a custom parser for the subnet data, and thorough unit testing. The most impressive highlights, however, are the custom algorithms for coalescing IPs and formatting the data. They work through an intricate yet efficient series of conditional statements, helper functions, and recursive functions, using data structures such as nested lists and dictionaries to handle the extensive formatting requirements.
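A generator pairs naturally with a line-oriented parser because it reads and validates one line at a time instead of loading thousands of lines into memory. The sketch below assumes a hypothetical input format of comma-separated CIDR subnets per line; the real input file's layout and the project's parser are not shown here, and parse_lines is an illustrative name.

```python
import ipaddress
from typing import Iterator, List, TextIO, Tuple

ParsedLine = Tuple[int, List[ipaddress.IPv4Network], List[str]]


def parse_lines(stream: TextIO) -> Iterator[ParsedLine]:
    """Hypothetical generator: yield (line number, valid subnets, invalid tokens)."""
    for line_no, raw in enumerate(stream, start=1):
        raw = raw.strip()
        if not raw:
            continue  # skip blank lines but keep the original line numbering
        valid: List[ipaddress.IPv4Network] = []
        invalid: List[str] = []
        for token in raw.split(","):
            token = token.strip()
            try:
                # AddressValueError and NetmaskValueError both subclass ValueError
                valid.append(ipaddress.IPv4Network(token))
            except ValueError:
                invalid.append(token)
        yield line_no, valid, invalid
```

Because nothing is read until the caller iterates, the same function works on an open file handle or an in-memory io.StringIO during testing.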

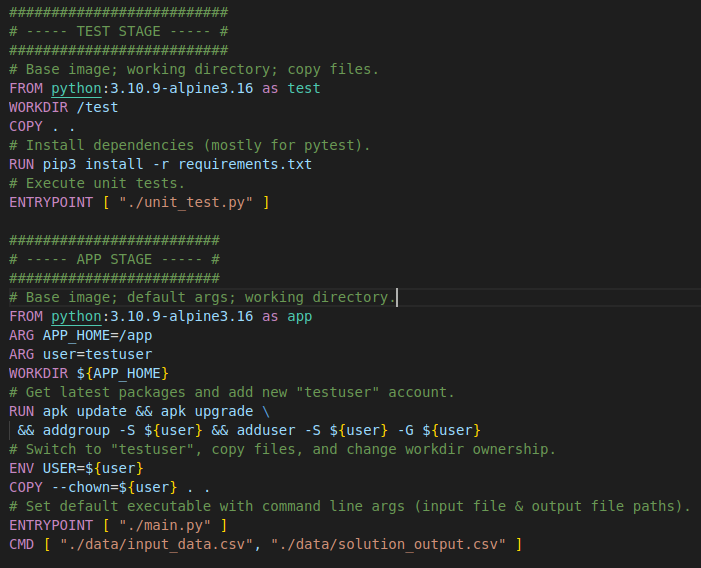

Please feel free to review my coalescer algorithm source code here.

Another requirement of this assignment was to create a Dockerfile that meets some specific requirements. As a bonus, I created unit tests using PyTest. To incorporate the unit tests, I wrote a multi-stage Dockerfile, with the first stage for testing and the second stage for the Coalescer application.

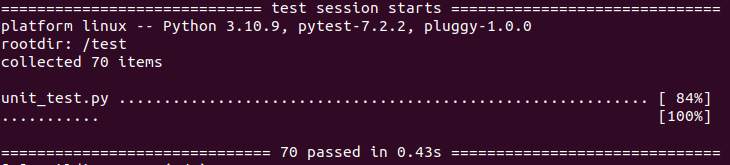

The test stage installs the dependencies needed for PyTest, then executes the unit tests. You can view the source code for the unit tests here.
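For readers unfamiliar with PyTest, here is a hedged example of the style of test such a stage might run. It is not taken from the project's test suite: mask_to_cidr is a compact stand-in for the kind of helper described above, and the parameter values are illustrative. pytest.mark.parametrize turns each tuple into its own test case, and pytest.raises asserts that invalid input is rejected.

```python
import pytest


def mask_to_cidr(mask: str) -> int:
    """Stand-in function under test: dotted mask -> prefix length."""
    octets = [int(o) for o in mask.split(".")]  # int() raises ValueError on junk
    if len(octets) != 4 or any(not 0 <= o <= 255 for o in octets):
        raise ValueError(f"invalid mask: {mask!r}")
    value = int.from_bytes(bytes(octets), "big")
    host_bits = ~value & 0xFFFFFFFF
    if host_bits & (host_bits + 1):  # host bits must be a solid run of low 1s
        raise ValueError(f"non-contiguous mask: {mask!r}")
    return 32 - host_bits.bit_length()


@pytest.mark.parametrize(
    "mask, expected",
    [("255.255.255.0", 24), ("255.255.255.255", 32), ("0.0.0.0", 0)],
)
def test_mask_to_cidr_valid(mask: str, expected: int) -> None:
    assert mask_to_cidr(mask) == expected


@pytest.mark.parametrize("mask", ["255.0.255.0", "255.255.255", "256.0.0.0"])
def test_mask_to_cidr_rejects_bad_masks(mask: str) -> None:
    with pytest.raises(ValueError):
        mask_to_cidr(mask)
```

Running pytest in the test stage discovers every test_* function automatically, so a failing case stops the build before the app image is created.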

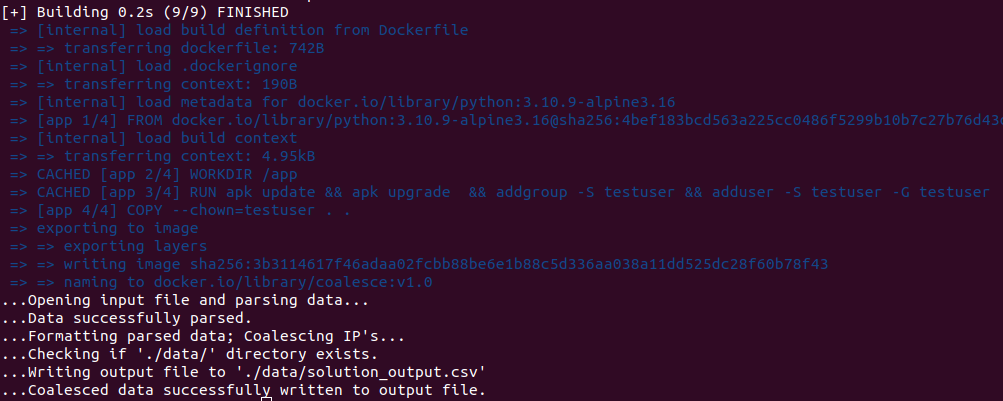

The app stage addresses the specific requirements of the assignment. The Docker image must have minimal layers and be as small as possible. It must also update packages, create a new user, and run the application as that user. When running the program, command-line arguments must be passed for the input data and solution output file paths.
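One common way to accept those two paths is the standard argparse module. This is a minimal sketch under the assumption of two positional arguments; the argument names input_path and output_path are hypothetical, not the project's actual interface.

```python
import argparse
from typing import List, Optional


def parse_args(argv: Optional[List[str]] = None) -> argparse.Namespace:
    """Parse the input and output file paths (hypothetical argument names)."""
    parser = argparse.ArgumentParser(
        description="Coalesce subnets from an input file and write a CSV."
    )
    parser.add_argument("input_path", help="path to the subnet input data file")
    parser.add_argument("output_path", help="path for the solution CSV file")
    # argv=None makes argparse read sys.argv[1:], which is what a container run provides
    return parser.parse_args(argv)
```

Passing an explicit list, as in parse_args(["data/input.txt", "out/solution.csv"]), makes the parser easy to unit test; in the application it would be called with no arguments from the script's entry point.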

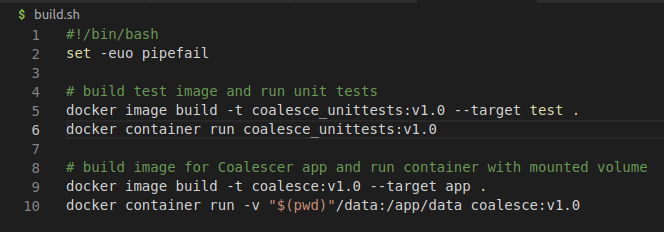

The final requirement was to write a shell script that builds the Docker image and runs the container with a mounted volume for persistent data (e.g., the solution output file).

As seen in the screenshot above, I added a section to the build script that builds the test image and runs the corresponding container before building the app image. That way, you can confirm that all tests pass before running the app.

Test:

App:

For the project's source code, please visit my GitHub repository and feel free to follow the README instructions for running the application:

My MEAN stack project based on the final project for CS-465-Full-Stack.

This JavaScript project builds a MEAN full stack application with an MVC design pattern and a RESTful API back-end service. The Node.js platform runs the back-end server, which serves dynamic web content using Express Handlebars as the view engine. Express routers map all incoming requests to the appropriate controllers, which contain the program's logic. The model schema is created with the Mongoose ODM, which defines the body structure for requests passing through the API service to the MongoDB database.

A front-end administration page is built with Angular and set up as a single-page application (SPA), providing a highly interactive interface for the client. Lastly, Node.js security packages help implement user registration and user authentication logic, including the use of JSON Web Tokens (JWT) to verify user access for ADD/EDIT/DELETE functionality in the 'Flowers' database collection.
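The project itself used Node.js packages for this; purely to illustrate what "verifying" a JSON Web Token involves, here is a language-neutral sketch in Python using only the standard library. It assumes the HS256 (HMAC-SHA256) algorithm and an optional exp claim, and the function names are hypothetical. A real application should rely on a maintained JWT library rather than hand-rolled code.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Any, Dict


def _b64url_encode(data: bytes) -> str:
    # JWTs use URL-safe base64 with the '=' padding stripped
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def _b64url_decode(text: str) -> bytes:
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def sign_token(claims: Dict[str, Any], secret: bytes) -> str:
    """Build header.payload.signature for an HS256 token."""
    header = {"alg": "HS256", "typ": "JWT"}
    signing_input = ".".join(
        _b64url_encode(json.dumps(part, separators=(",", ":")).encode("utf-8"))
        for part in (header, claims)
    )
    signature = hmac.new(secret, signing_input.encode("ascii"), hashlib.sha256).digest()
    return f"{signing_input}.{_b64url_encode(signature)}"


def verify_token(token: str, secret: bytes) -> Dict[str, Any]:
    """Return the claims if signature and expiry check out, else raise ValueError."""
    parts = token.split(".")
    if len(parts) != 3:
        raise ValueError("malformed token")
    header_b64, claims_b64, signature_b64 = parts
    expected = hmac.new(
        secret, f"{header_b64}.{claims_b64}".encode("ascii"), hashlib.sha256
    ).digest()
    # constant-time comparison avoids leaking how many bytes matched
    if not hmac.compare_digest(expected, _b64url_decode(signature_b64)):
        raise ValueError("invalid signature")
    if json.loads(_b64url_decode(header_b64)).get("alg") != "HS256":
        raise ValueError("unexpected algorithm")
    claims = json.loads(_b64url_decode(claims_b64))
    if "exp" in claims and time.time() >= claims["exp"]:
        raise ValueError("token expired")
    return claims
```

A protected ADD/EDIT/DELETE route would call the equivalent of verify_token on the token from the request's Authorization header and reject the request when it raises.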

My project improves upon the original class project, and the content for my project is original. It is based on a real company, with their permission, but does not represent their official website.

Click here to view a slide presentation that highlights key features of this project: https://github.com/bkoconnell/bkoconnell.github.io/raw/master/assets/presentations/MEAN_stack.pptx

For the source code, please visit my GitHub repository:

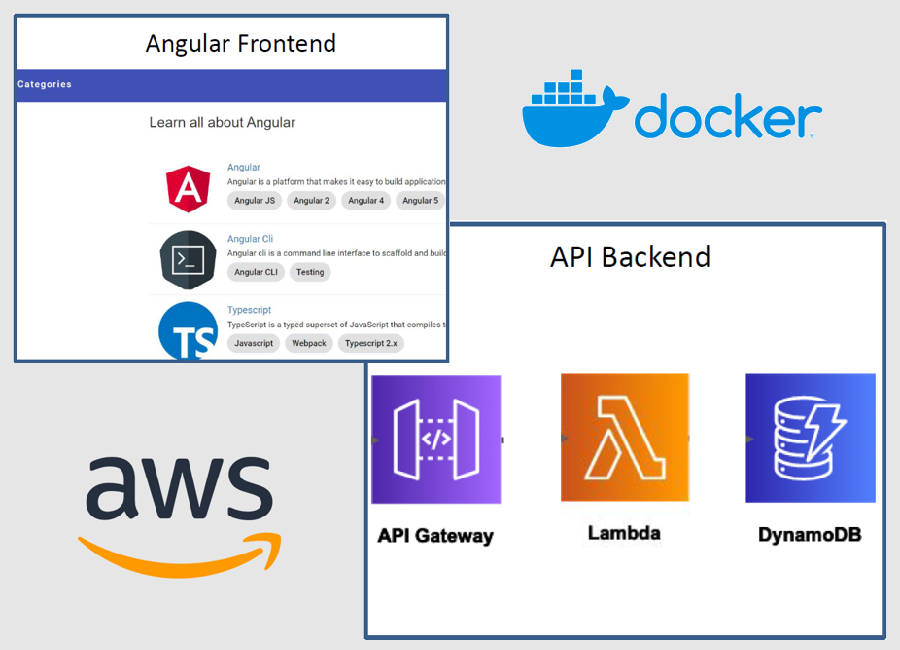

Final project for my CS-470 Full Stack II class.

This project migrates an existing MEAN full stack application to the AWS cloud. Docker is used to containerize the Angular frontend, which is then uploaded to an S3 bucket through the AWS console for deployment to a serverless cloud host. The backend API is refactored using API Gateway, Lambda functions, and AWS security features. The database is built from scratch with DynamoDB NoSQL tables.
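To show the shape of a Lambda function behind API Gateway, here is a hedged Python sketch, not the project's code. It assumes the REST API proxy integration, where the event carries httpMethod and pathParameters and the function returns statusCode, headers, and a string body. An in-memory dictionary stands in for the DynamoDB table so the sketch runs without AWS; FAKE_TABLE and the item fields are hypothetical.

```python
import json
from typing import Any, Dict

# Hypothetical stand-in for a DynamoDB table; a real function would use a
# boto3 Table resource here instead of a dictionary.
FAKE_TABLE: Dict[str, Dict[str, Any]] = {
    "item-1": {"id": "item-1", "name": "Sample item"},
}


def _response(status: int, body: Any) -> Dict[str, Any]:
    """Build the response dictionary API Gateway's proxy integration expects."""
    return {
        "statusCode": status,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(body),  # the body must be a string, not a dict
    }


def lambda_handler(event: Dict[str, Any], context: Any) -> Dict[str, Any]:
    method = event.get("httpMethod")
    item_id = (event.get("pathParameters") or {}).get("id")
    if method == "GET" and item_id:
        item = FAKE_TABLE.get(item_id)
        return _response(200, item) if item else _response(404, {"message": "not found"})
    if method == "GET":
        return _response(200, list(FAKE_TABLE.values()))
    return _response(405, {"message": f"method {method} not allowed"})
```

Keeping the response construction in one helper means every route returns the same structure, which API Gateway requires in order to pass the result back to the frontend.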

For a detailed review of this project, please watch my final project presentation here:

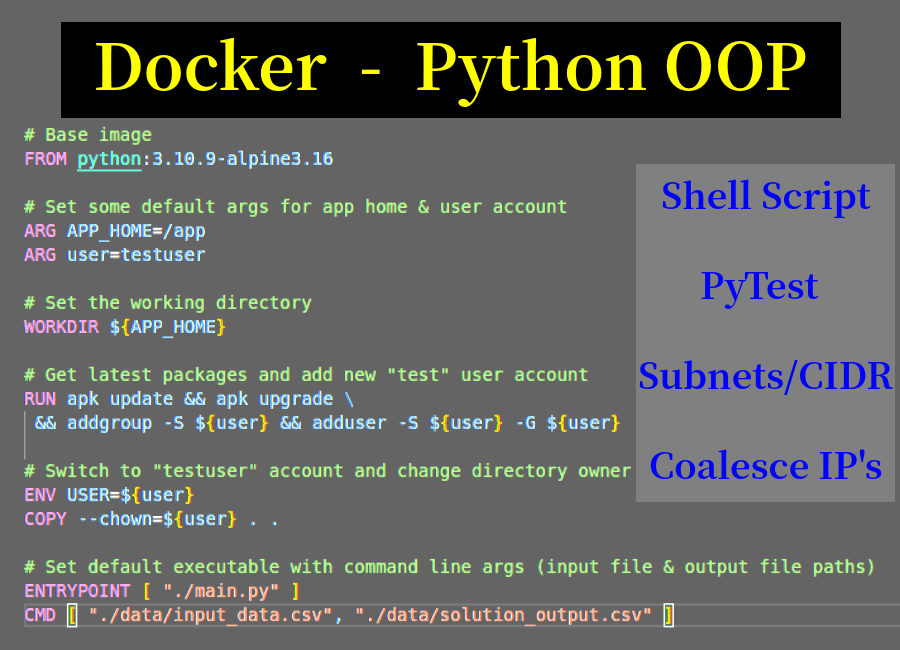

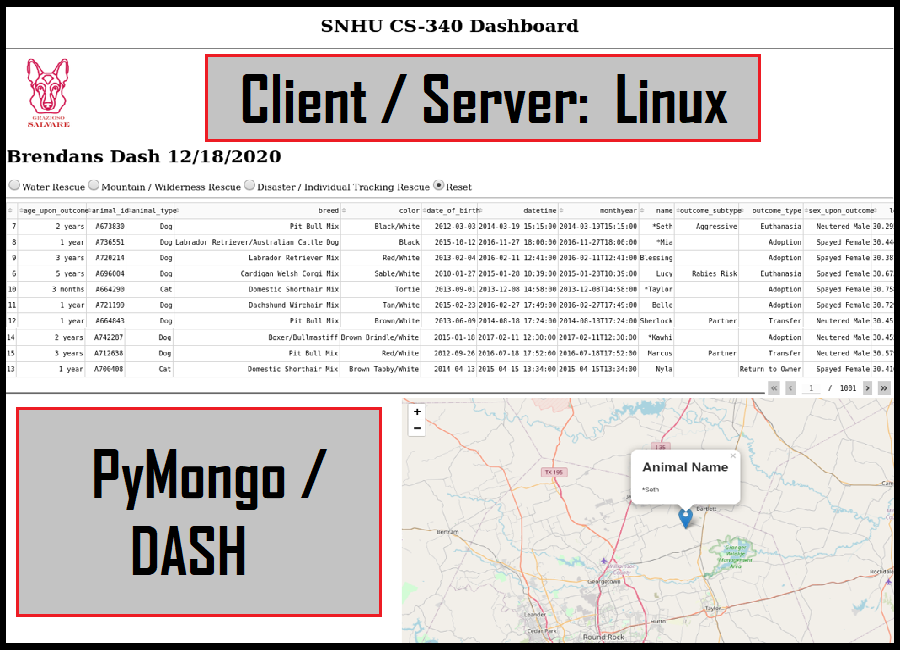

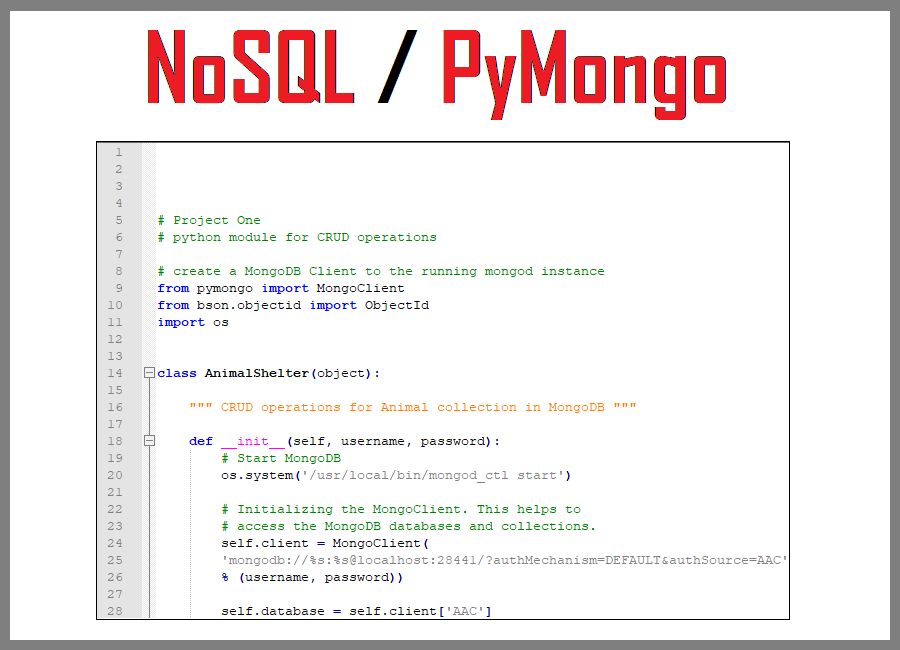

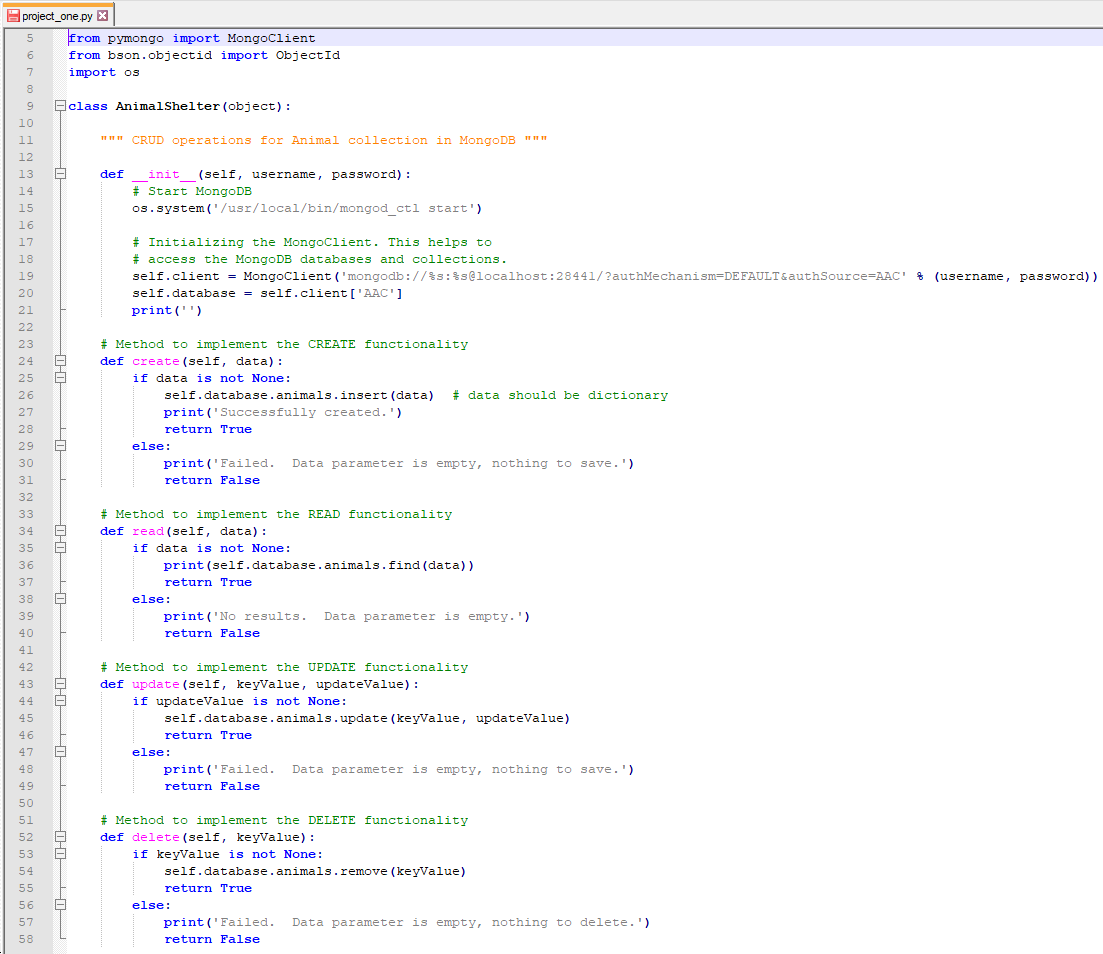

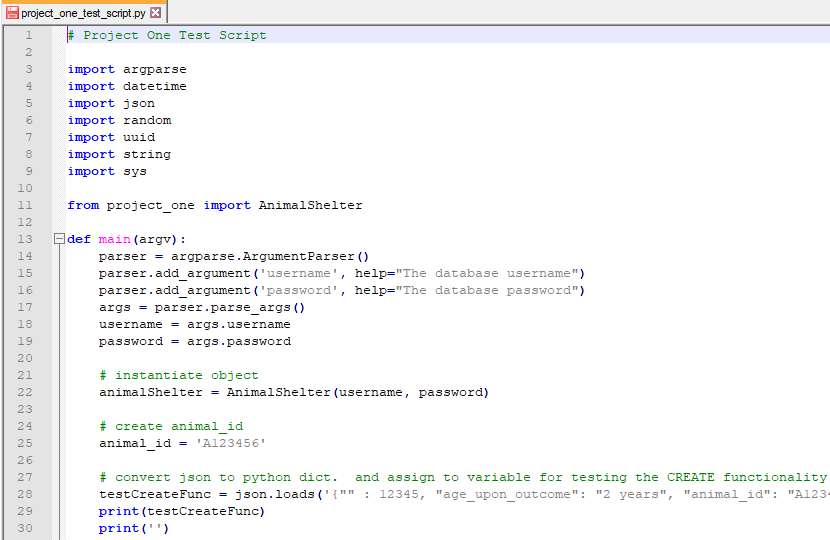

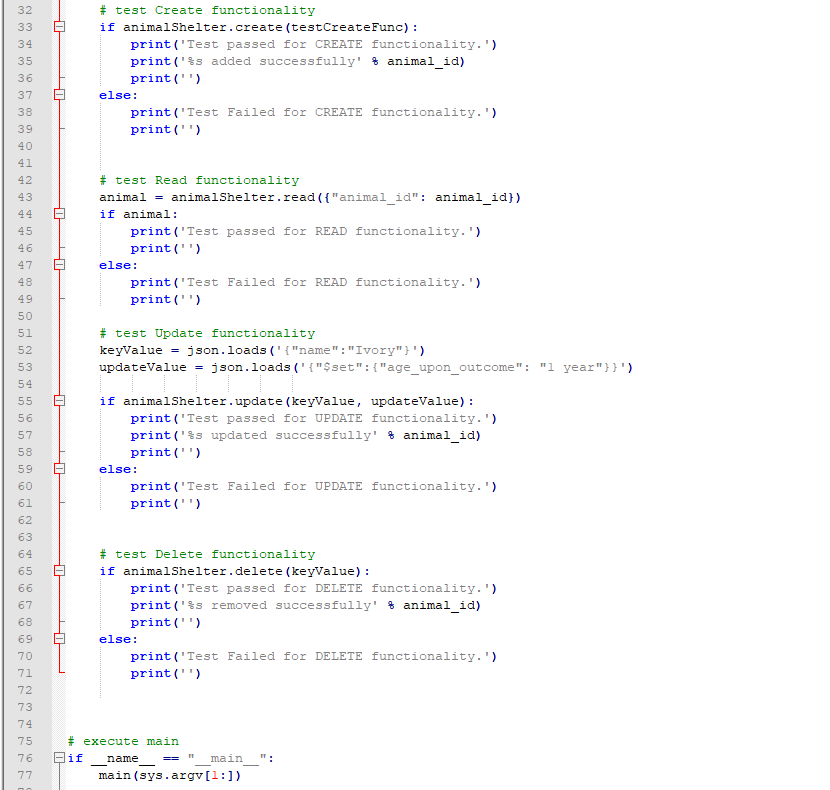

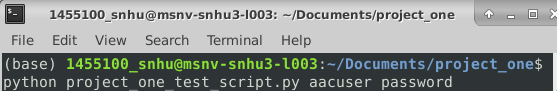

Project One for this class focuses on setting up a NoSQL database with MongoDB, creating a Python module that communicates with the database via the PyMongo distribution, and writing a test script to verify the module's functionality. The Python module includes logic to connect to the MongoDB database as well as logic for basic CRUD operations.
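A CRUD module of this kind can be quite small. The sketch below is an assumption-laden illustration, not my Project One code: the class name CrudModule and its method names are hypothetical, while insert_one, find, update_many, and delete_many are real PyMongo Collection methods. The collection is passed in so the class can be exercised without a running server.

```python
from typing import Any, Dict, List


class CrudModule:
    """Hypothetical CRUD wrapper around a PyMongo Collection."""

    def __init__(self, collection: Any) -> None:
        self.collection = collection

    @classmethod
    def connect(cls, uri: str, db_name: str, collection_name: str) -> "CrudModule":
        # Imported lazily so the class can be tested without pymongo or a server
        from pymongo import MongoClient

        client = MongoClient(uri)
        return cls(client[db_name][collection_name])

    def create(self, document: Dict[str, Any]) -> bool:
        if not document:
            raise ValueError("document must be a non-empty dict")
        # Note: PyMongo adds an "_id" key to the passed-in dict
        return self.collection.insert_one(document).acknowledged

    def read(self, query: Dict[str, Any]) -> List[Dict[str, Any]]:
        # Project away _id so results are plain, JSON-friendly dicts
        return list(self.collection.find(query, {"_id": False}))

    def update(self, query: Dict[str, Any], changes: Dict[str, Any]) -> int:
        return self.collection.update_many(query, {"$set": changes}).modified_count

    def delete(self, query: Dict[str, Any]) -> int:
        return self.collection.delete_many(query).deleted_count
```

A test script would then call CrudModule.connect with the server's connection string and exercise each method in turn, checking the returned booleans and counts.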

Below are screenshots of the test script execution and the corresponding results:

For my Project One source code, visit the following repository:

https://github.com/bkoconnell/CS340-final-project/tree/main/project_one/

For additional NoSQL assignment submissions, visit the following repository:

https://github.com/bkoconnell/CS340-final-project/tree/main/mongodb_assignments/

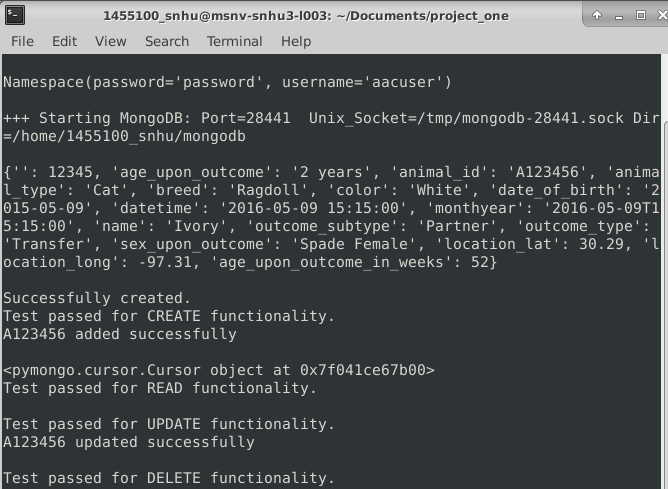

The second project for this class completes the Python full stack by building a client-side (front-end) layer with Python's Dash framework on top of Project One's bottom and middle layers. The Dash web application interacts with the CRUD Python module, which is the middleware layer of the stack. It is the “glue” between the base level and the client level and contains the application's logic. The CRUD Python module uses the PyMongo driver, which provides a quick and reliable connection to the MongoDB server.

For the source code, please visit my GitHub repository. You can also view the CS 340 README.docx for more details regarding the project submission:

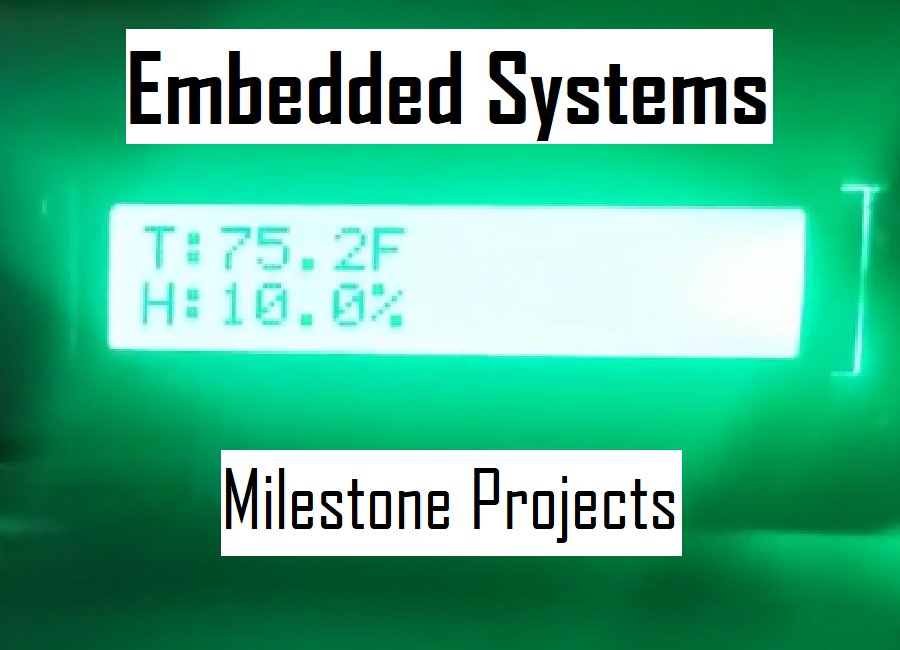

(Embedded systems with Raspberry Pi 4 and GrovePi+)

In this class, we explored emerging systems, architectures, and technologies with a focus on the hardware/software interface. Using the Raspberry Pi embedded system and the GrovePi+ sensor kit, we wrote software to interface with the embedded system and control its hardware and software components. The following milestone projects are the building blocks for the eventual final project. View the repository for the source code and README file:

https://github.com/bkoconnell/cs350-milestones/

This introductory milestone used a basic sound sensor from the GrovePi+ kit. An LED was attached to the embedded system along with the sound sensor, and the software was modified so that sound exceeding a specified threshold triggered the LED to turn on. The specific Milestone 1 modifications are commented in the source code.
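The threshold logic can be separated from the hardware calls, which makes it testable off the Pi. In this hedged sketch, SOUND_THRESHOLD, led_should_be_on, and monitor are hypothetical names, and the threshold value is an assumption. On the device, read_sound could wrap grovepi.analogRead on the sensor's port and set_led could wrap grovepi.digitalWrite on the LED's port.

```python
import time
from typing import Callable

SOUND_THRESHOLD = 400  # hypothetical analog reading (0-1023) treated as "loud"


def led_should_be_on(sound_level: int, threshold: int = SOUND_THRESHOLD) -> bool:
    """The LED turns on only when the reading exceeds the threshold."""
    return sound_level > threshold


def monitor(
    read_sound: Callable[[], int],
    set_led: Callable[[bool], None],
    iterations: int,
    delay: float = 0.5,
) -> None:
    """Poll the sensor a fixed number of times and drive the LED accordingly.

    On the Pi, read_sound might be lambda: grovepi.analogRead(SOUND_PORT) and
    set_led might be lambda on: grovepi.digitalWrite(LED_PORT, int(on)).
    """
    for _ in range(iterations):
        set_led(led_should_be_on(read_sound()))
        time.sleep(delay)  # polling interval between readings
```

Injecting the read and write functions means the same loop can be driven by recorded readings in a test and by the real sensor on the device.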

Milestone 1 source code: https://github.com/bkoconnell/cs350-milestones/tree/main/milestone1_sound_sensor

Milestone 1 demo:

https://youtu.be/Qr349Zf1F6U

(ensure the video is set to the highest quality)

This milestone is the first building block for the final project. The Grove DHT (digital humidity and temperature) sensor is used to capture temperature and humidity readings from the environment. The software uses a while loop to read data from the sensor and convert the temperature reading to Fahrenheit. It then creates a JSON object for the data with specific formatting and stores the object in a JSON database file (output as data.json). Additionally, the JSON data is output to the console for demonstration purposes.
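The conversion and record-building steps are simple enough to show directly. This is a sketch under assumptions: the field names in make_reading are hypothetical, not the milestone's actual JSON format, and on the Pi the raw Celsius and humidity values would come from a call such as grovepi.dht(port, 0). The conversion itself is the standard F = C × 9/5 + 32.

```python
from datetime import datetime
from typing import Any, Dict


def celsius_to_fahrenheit(celsius: float) -> float:
    """Standard conversion: F = C * 9/5 + 32."""
    return celsius * 9 / 5 + 32


def make_reading(temp_c: float, humidity: float, timestamp: datetime) -> Dict[str, Any]:
    """Build one JSON-ready record (hypothetical field names)."""
    return {
        "timestamp": timestamp.isoformat(timespec="seconds"),
        "temperature_f": round(celsius_to_fahrenheit(temp_c), 1),
        "humidity": round(humidity, 1),
    }
```

For example, make_reading(22.0, 45.0, datetime(2021, 1, 1, 12, 0)) produces a temperature_f of 71.6, and json.dumps turns the dictionary into the text written to the file and console.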

The software also converts the readings to strings and outputs them to an LCD screen. A sleep timer at the end of the loop determines how often the loop iterates, which controls the output frequency for both the JSON file and the LCD screen refresh. Note that each loop iteration appends the new data to the existing JSON file so that previous data is not overwritten. The JSON database file (data.json) for this milestone is located in the directory with the source code (link below). In the demo below, the Python script is run in the terminal, and I place my fingers on the DHT sensor to change the humidity and demonstrate the program's functionality.
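Appending without overwriting can be done in several ways. This hedged sketch takes one of them: it loads the existing list of records, adds the new one, and rewrites the file, so data.json always remains a single valid JSON array. The function names append_reading and lcd_text are hypothetical, and the LCD string layout is an assumption for a two-line display (the Grove LCD library's setText could display it).

```python
import json
import os
from typing import Any, Dict, List


def append_reading(path: str, reading: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Add one record to a JSON array file without discarding earlier records."""
    readings: List[Dict[str, Any]] = []
    if os.path.exists(path) and os.path.getsize(path) > 0:
        with open(path, "r", encoding="utf-8") as f:
            readings = json.load(f)  # keep everything written so far
    readings.append(reading)
    with open(path, "w", encoding="utf-8") as f:
        json.dump(readings, f, indent=2)
    return readings


def lcd_text(temp_f: float, humidity: float) -> str:
    """Format two short lines for a small character LCD."""
    return f"Temp: {temp_f:.1f}F\nHumidity: {humidity:.0f}%"
```

Rewriting the whole array is simple and keeps the file easy to load for a dashboard; for very long-running logs, writing one JSON object per line would avoid re-reading the file on every iteration.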

Milestone 2 source code: https://github.com/bkoconnell/cs350-milestones/tree/main/milestone2_dht_sensor

Milestone 2 demo:

https://youtu.be/Epvm2UJLJi0

(ensure the video is set to the highest quality)

Another component of the final project is the light sensor, which is introduced in this milestone. The goal of this milestone is to simulate an interrupt condition for the embedded system. The software is designed so that when the light reading crosses a specific threshold (when the environment is dark enough), the attached LED turns on. The logic is written inside a while loop, and a sleep timer at the end of the loop determines how often the loop iterates. Each iteration reads the sensor data and checks it against the threshold.

In the demo below, a blue LED and a light sensor are connected to the embedded system. When the program starts, the blue LED turns on because the room is dark enough to trigger it. However, when I shine a flashlight onto the light sensor, the blue LED shuts off. This demonstrates the interrupt condition set by the lighting threshold in the software. For each loop iteration, the sensor reading and calculated resistance are output to the console.
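The threshold check on the calculated resistance can be sketched as follows. The resistance formula is modeled on the one used in the GrovePi light sensor example, (1023 - value) * 10 / value in kilohms, and the 10 kΩ threshold is an assumption rather than this milestone's actual setting. Darker light gives a lower analog reading and therefore a higher resistance, which is what turns the LED on.

```python
def resistance_kohm(sensor_value: int) -> float:
    """Estimate sensor resistance (kilohms) from a 0-1023 analog reading."""
    if sensor_value <= 0:
        return float("inf")  # no light at all: treat as infinite resistance
    return (1023 - sensor_value) * 10 / sensor_value


def led_on(sensor_value: int, threshold_kohm: float = 10.0) -> bool:
    """Dark enough (resistance above the threshold) means the LED should be on."""
    return resistance_kohm(sensor_value) > threshold_kohm
```

Guarding the zero reading avoids a ZeroDivisionError in complete darkness, which would otherwise crash the polling loop.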

Milestone 3 source code: https://github.com/bkoconnell/cs350-milestones/tree/main/milestone3_light_sensor

Milestone 3 demo:

https://youtu.be/Vu1d4RBZwRk

(ensure the video is set to the highest quality)

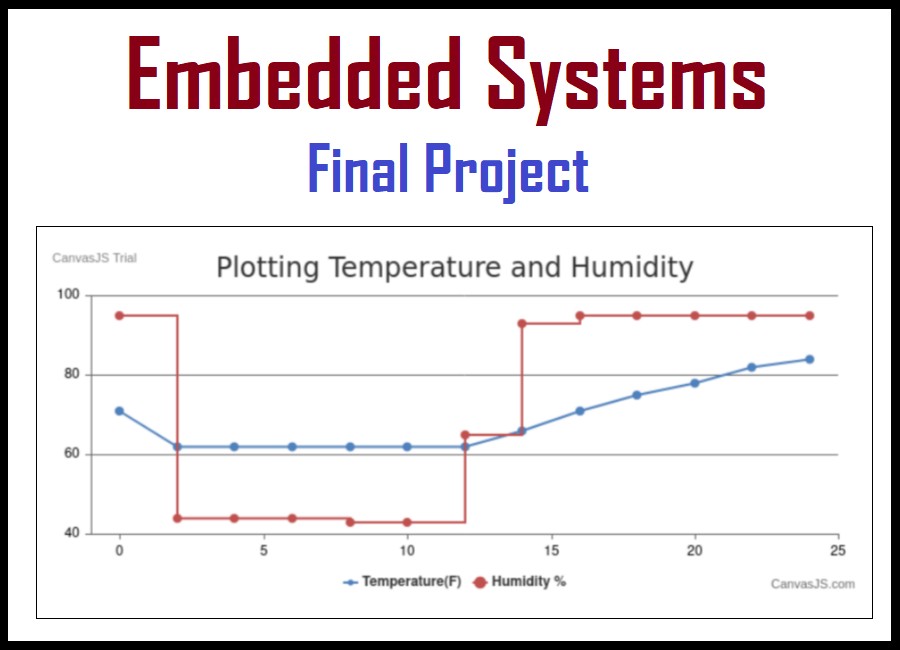

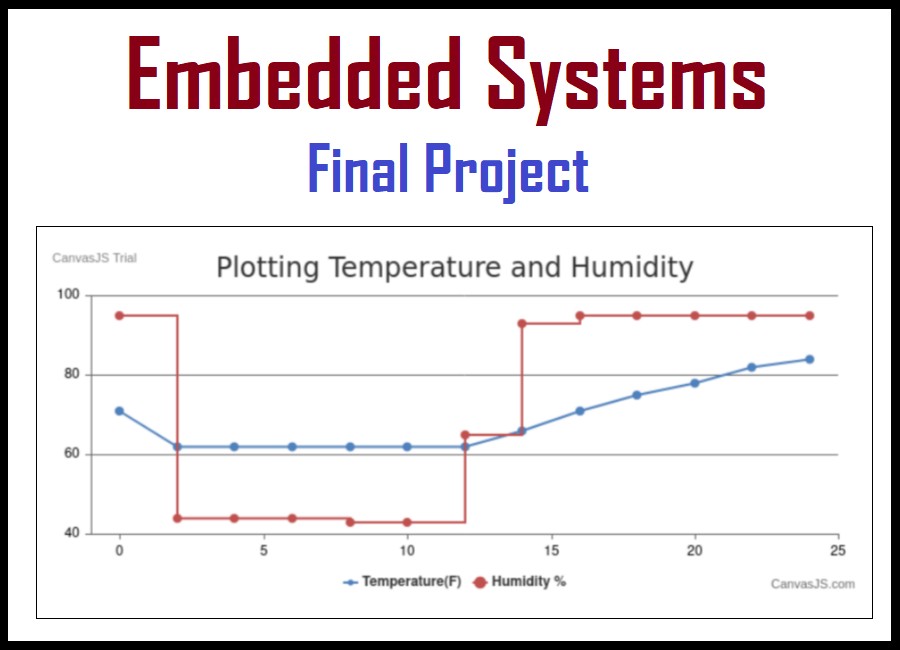

The following are the Milestone 4 project and the Final Project, which build on the previous three milestones for this class. View the repository for the source code and README file:

https://github.com/bkoconnell/cs350-final-project/

Source code includes data.json with the collected data (used to feed the web dashboard), index.html (the web dashboard), and milestone4_v03.py (the Python script for the DHT weather station).

Milestone 4 source code: https://github.com/bkoconnell/cs350-final-project/tree/main/milestone4_dht_web_dashboard/

Milestone 4 demo:

https://youtu.be/W_rE-gQg8Z8

(ensure the video is set to the highest quality)

Source code includes data.json with the collected data and final.py, the Python script for the DHT Weather Station prototype.

Final Project source code: https://github.com/bkoconnell/cs350-final-project/tree/main/final_project_weather_station/

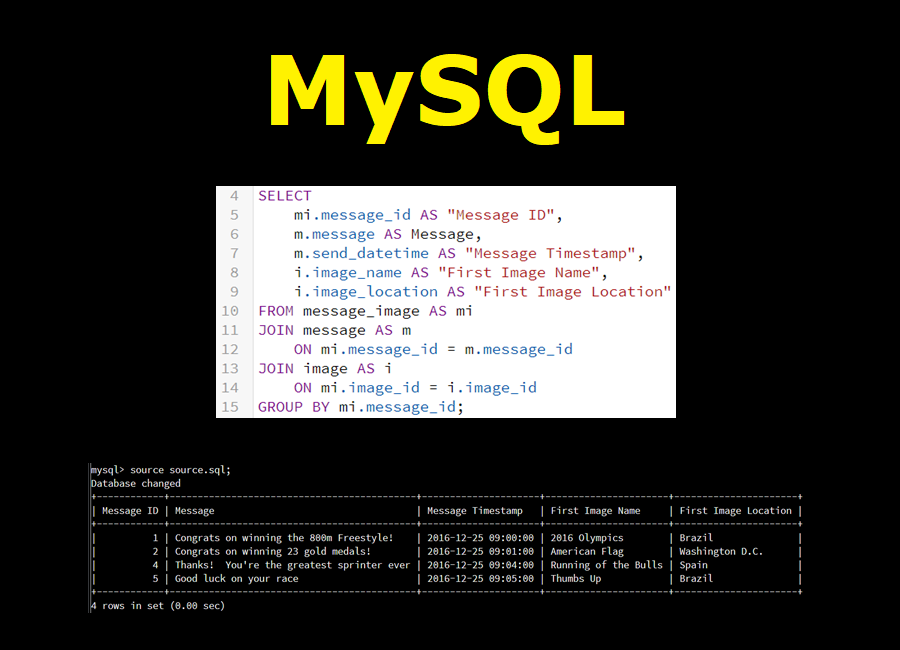

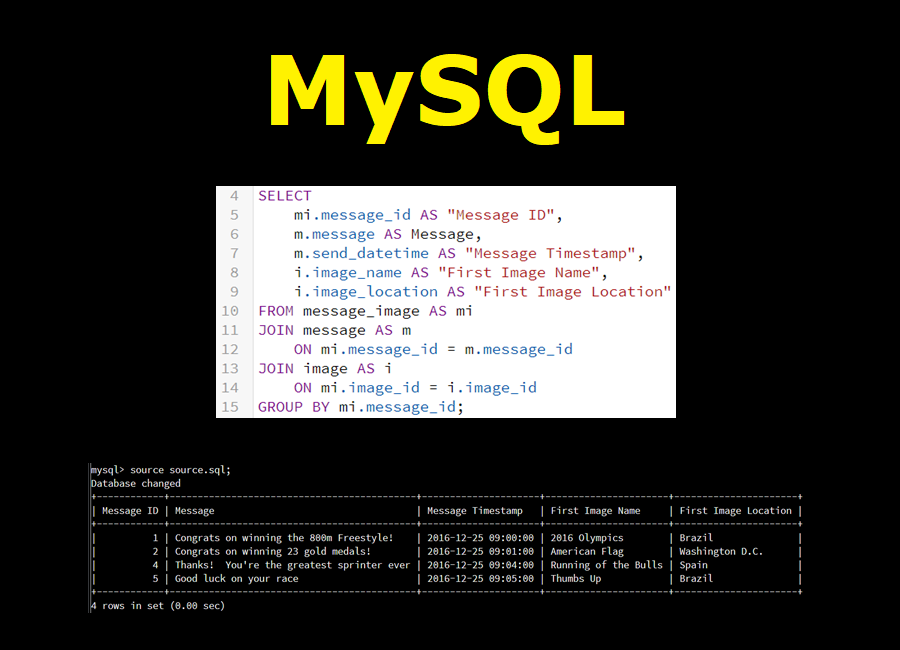

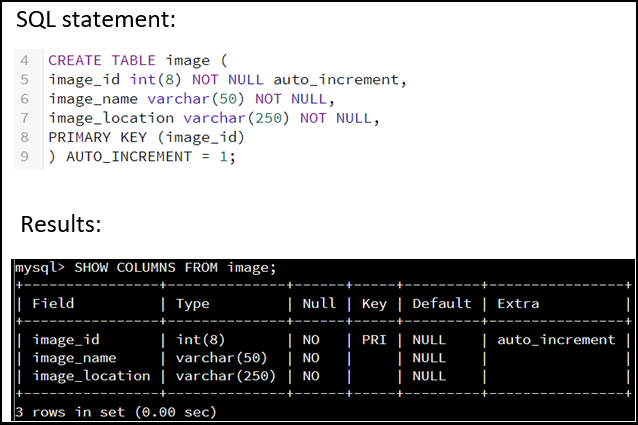

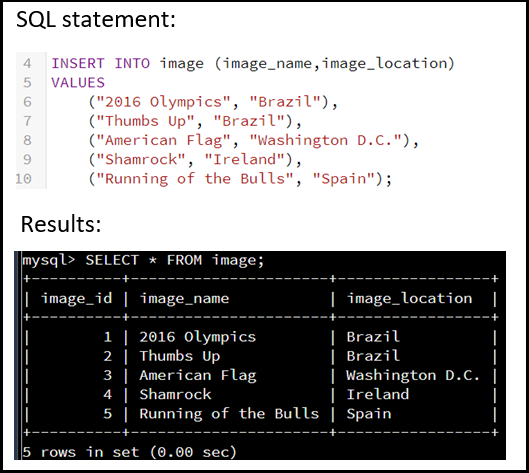

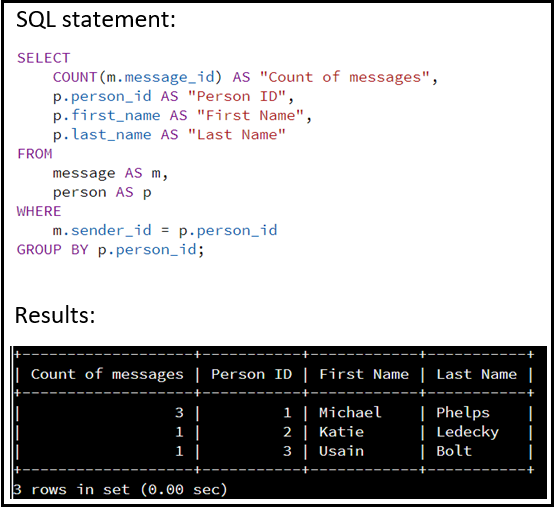

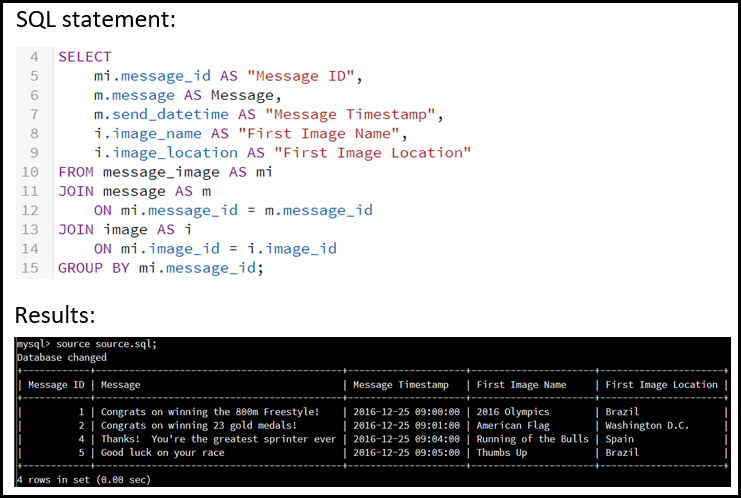

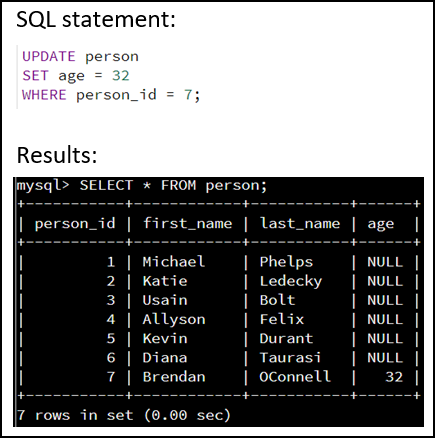

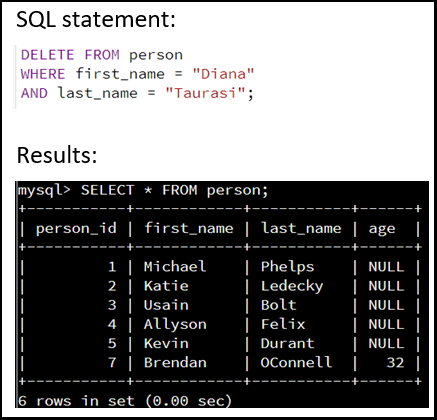

This introductory class on Structured Query Language (SQL) used the MySQL relational database management system (RDBMS) in the Codio learning environment. All basic CRUD operations were covered: creating, reading, updating, and deleting tables and their data. Below are a few examples of SQL statements and the corresponding table results from my DAD-220 final project.
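For a self-contained illustration of the four CRUD operations, the sketch below runs standard SQL statements through Python's built-in sqlite3 module. SQLite stands in for MySQL here only so the example runs without a database server; these basic statements read much the same in MySQL. The employee table and its rows are hypothetical, not data from the DAD-220 project.

```python
import sqlite3
from typing import List, Tuple


def run_crud_demo() -> List[Tuple[int, str, str]]:
    """Create, insert, update, delete, then read back from an in-memory table."""
    conn = sqlite3.connect(":memory:")
    cur = conn.cursor()
    # Create: define a table and insert rows (placeholders avoid SQL injection)
    cur.execute(
        "CREATE TABLE employee (id INTEGER PRIMARY KEY, name TEXT NOT NULL, department TEXT)"
    )
    cur.executemany(
        "INSERT INTO employee (name, department) VALUES (?, ?)",
        [("Ada", "Finance"), ("Grace", "IT")],
    )
    # Update: change a column value for matching rows
    cur.execute("UPDATE employee SET department = ? WHERE name = ?", ("IT", "Ada"))
    # Delete: remove matching rows
    cur.execute("DELETE FROM employee WHERE name = ?", ("Grace",))
    # Read: query what remains
    rows = cur.execute("SELECT id, name, department FROM employee ORDER BY id").fetchall()
    conn.close()
    return rows
```

After these statements, the only remaining row is Ada, now in the IT department.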

For my complete project with detailed explanations, please visit the following repository:

(for screenshots, view the .docx file)